NU-WRF Version 11.5 User’s Guide

Introduction

This Document

This is the NASA Unified-Weather Research and Forecasting (NU-WRF) Version 11.5 User’s Guide. This document provides an overview of NU-WRF; describes how to download, compile, and run the NU-WRF software; and guides porting the software to new platforms.

This document consists of six sections and three appendices:

Introduction is the present introductory section.

NU-WRF System provides general information about the NU-WRF project and its components.

Obtaining Software provides information on obtaining a software usage agreement and the NU-WRF source code.

Building Software describes how to compile the NU-WRF software.

Front-End Workflows describes several front-end workflows that can be employed with the NU-WRF modeling system, ranging from basic weather simulation to advanced aerosol-microphysics-radiation coupling to alternate initialization methods. This includes information on new pre-processors and NASA changes to the community WRF model.

Post-Processors describes several post-processors for visualization and/or verification.

Finally, the FAQ section answers Frequently Asked Questions about NU-WRF, Porting provides guidance on porting NU-WRF to new platforms, and Revisions lists the NU-WRF revision history.

Acknowledgments

The development of NU-WRF has been funded by the NASA Modeling, Analysis, and Prediction Program. The Goddard microphysics, radiation, aerosol coupling modules, and G-SDSU are developed and maintained by the Mesoscale Atmospheric Processes Laboratory at NASA Goddard Space Flight Center (GSFC). The GOCART and NASA dust aerosol emission modules are developed and maintained by the GSFC Atmospheric Chemistry and Dynamics Laboratory. The LIS, LDT, and LVT components are developed and maintained by the GSFC Hydrological Sciences Laboratory. The CASA2WRF, GEOS2WRF, GOCART2WRF, LISWRFDOMAIN, LIS4SCM, NEVS, NDVIBARENESS4WRF, and SST2WRF components are maintained by the GSFC Computational and Information Sciences and Technology Office. SST2WRF includes binary reader source code developed by Remote Sensing Systems.

Past and present contributors affiliated with the NU-WRF effort include: Kristi Arsenault, Clay Blankenship, Scott Braun, Rob Burns, Jon Case, Mian Chin, Tom Clune, David Considine, Carlos Cruz, John David, Craig Ferguson, Jim Geiger, Mei Han, Ken Harrison, Arthur Hou, Takamichi Iguchi, Jossy Jacob, Randy Kawa, Eric Kemp, Dongchul Kim, Kyu-Myong Kim, Jules Kouatchou, Anil Kumar, Sujay Kumar, William Lau, Tricia Lawston, David Liu, Yuqiong Liu, Toshi Matsui, Hamid Oloso, Christa Peters-Lidard, Chris Redder, Scott Rheingrover, Joe Santanello, Roger Shi, David Starr, Rahman Syed, Qian Tan, Wei-Kuo Tao, Zhining Tao, Eduardo Valente, Bruce Van Aartsen, Gary Wojcik, Di Wu, Jinwoong Yoo, Benjamin Zaitchik, Brad Zavodsky, Sara Zhang, Shujia Zhou, and Milija Zupanski.

The upstream community WRF, WPS, and ARWPOST components are developed and supported by the National Center for Atmospheric Research (NCAR), which is operated by the University Corporation for Atmospheric Research and sponsored by the National Science Foundation (NSF). The Kinetic Pre-Processor included with WRF-Chem was primarily developed by Dr. Adrian Sandu of the Virginia Polytechnic Institute and State University. The community RIP4 is maintained by NCAR and was developed primarily by Dr. Mark Stoelinga, formerly of the University of Washington. The UPP is developed by the National Centers for Environmental Prediction; the community version is maintained by the Developmental Testbed Center (DTC), which is sponsored by the National Oceanic and Atmospheric Administration, the United States Air Force, and the NSF. DTC also develops and maintains the community MET package. The Community PREP_CHEM_SOURCES is primarily developed by the Centro de Previsão de Tempo e Estudos Climáticos, part of the Instituto Nacional de Pesquisas Espaciais, Brazil.

NU-WRF Version 11.5, “Ekman”, is named after Vagn Walfrid Ekman, a Swedish oceanographer best known for his studies of the dynamics of ocean currents. Common oceanographic terms such as Ekman layer, Ekman spiral, and Ekman transport derive from his research.

NU-WRF System

NU-WRF has been developed at Goddard Space Flight Center (GSFC) as an observation-driven integrated modeling system representing aerosol, cloud, precipitation, and land processes at satellite-resolved scales (Peters-Lidard et al. 2015). NU-WRF is intended as a superset of the standard NCAR Advanced Research WRF [WRF-ARW; (Skamarock et al. 2008)] and incorporates:

The GSFC Land Information System [LIS; see (Kumar et al. 2006) and (Peters-Lidard et al. 2007)], coupled to WRF and also available as a stand-alone executable;

The WRF-Chem enabled version of the Goddard Chemistry Aerosols Radiation Transport model [GOCART; (Chin et al. 2002)];

GSFC radiation and microphysics schemes including revised couplings to the aerosols [(Shi et al. 2014); (Lang et al. 2014)]; and

In addition, multiple pre- and post-processors from the community and from GSFC have been collected with WRF and LIS. Taken together, the NU-WRF modeling system provides a framework for simulating aerosol and land use effects on such phenomena as floods, tropical cyclones, mesoscale convective systems, droughts, and monsoons (Peters-Lidard et al. 2015). Support also exists for treating CO\(_2\) as a tracer, with plans to further refine into source components (anthropogenic versus biogenic). Finally, the software has been modified to use netCDF4 (http://www.unidata.ucar.edu/software/netcdf/) with HDF5 compression (https://www.hdfgroup.org/HDF5/), reducing netCDF file sizes by up to 50%.

Recently NU-WRF was incorporated into a separate, Maximum Likelihood Ensemble Filter-based atmospheric data assimilation system (NASA 2016), with the capability of assimilating cloud and precipitation-affected radiances (Zhang et al. 2015). In addition, some secondary, rarely used elements of the community WRF modeling system that are not yet included with NU-WRF may be added in the future.

Components

The NU-WRF package contains the following components and utilities:

The

WRF/component contains a modified copy of the core WRF version 4.4 modeling system [see Chapter 5 of (NCAR 2022)], the WRF-Fire wildfire library [see Appendix A of (NCAR 2022)], the WRF-Chem atmospheric chemistry library [see (Peckham et al. 2022) and (Peckham et al. 2015)], and several preprocessors (REAL, CONVERT_EMISS, TC, and NDOWN). In particular, WRF has been modified to include:couplings between these schemes and GOCART

new dust emission options

a new CO\(_2\) tracer parameterization,

the 2017 Goddard radiation package

This latter package was officially integrated into WRF in v4.2.

WRF/also includes LIS/LDT/LVT (inLISF/) with code modifications to both LIS and WRF-ARW to facilitate on-line coupling between the atmosphere and Noah land surface models, as well as land data assimilation (NASA 2022b).The

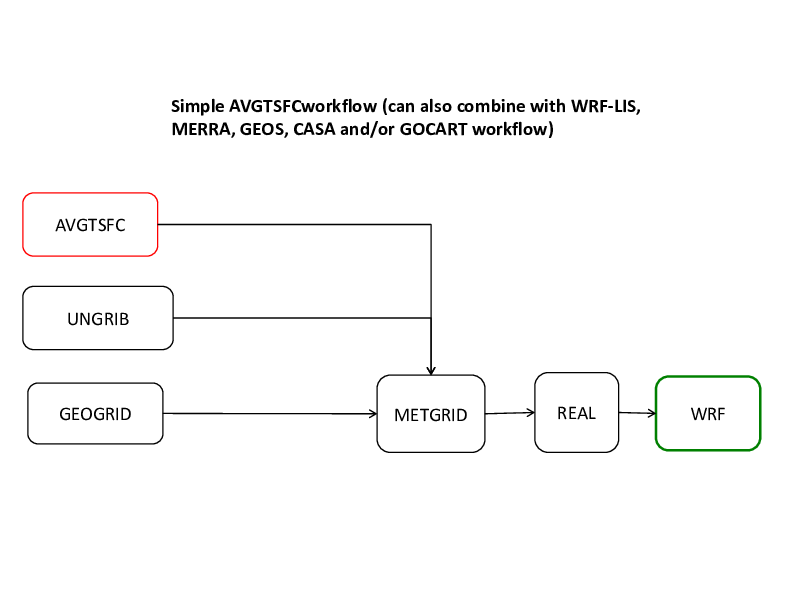

WPS/component contains a modified copy of the WRF Preprocessing System version 4.4 [see Chapter 3 of (NCAR 2022)]. This includes the GEOGRID, UNGRIB, and METGRID programs used to set up a WRF domain, to interpolate static and climatological terrestrial data, to extract fields from GRIB and GRIB2 files, and to horizontally interpolate the fields to the WRF grid. This also includes the optional AVG_TSFC preprocessor, which calculates time-average surface temperatures for use in initializing small in-land lake temperatures.The

LISF/ldt/component contains version 7.4 of the NASA Land Data Toolkit (LDT) software (NASA 2022a). This acts both as a preprocessor for LIS (including interpolation of terrestrial data to the LIS grid and separate preprocessing for data assimilation) and a postprocessor for LIS (merging dynamic fields from a LIS off-line “spin-up” simulation with static data for eventual input to WRF in LIS coupled mode).The

LISF/lvt/component contains version 7.4 of the NASA Land Verification Toolkit (NASA 2022c) for verifying LIS land and near-surface fields against observations and gridded analyses.The

RIP4/component contains a modified copy of the NCAR Graphics-based Read/Interpolate/Plot (Stoelinga 2006) graphical postprocessing software, version 4.6.7. Modifications include support for UMD land use data (from LIS) and WRF-Chem output.The

ARWpost/component contains version 3.1 of the GrADS-compatible ARWpost program for visualization of output [see Chapter 9 of (NCAR 2022)].The

UPP/component contains a modified copy of the NCEP Unified Post Processor version 3.1.1. This software can derive fields from WRF netCDF output and write in GRIB format (see (DTC 2015)).The

MET/component contains version 10.0.0 of the Meteorological Evaluation Tools (DTC 2021) software produced by the Developmental Testbed Center. This can be used to evaluate WRF atmospheric fields converted to GRIB via UPP against observations and gridded analyses.The Goddard Satellite Data Simulator Unit,

GSDSU/(T. Matsui et al. 2014), component contains version 4.0 of the Goddard Satellite Data Simulation Unit (Toshi Matsui and Kemp 2016), which can be used to simulate satellite imagery, radar, and lidar data for comparison against actual remote-sensing observations.The

postproc/GMP/component contains the Goddard MicroPhysics simulator, an end-to-end microphysics development tool. It reads WRF output and conducts microphysics processes for one time step. Thus, it is a very useful tool for independent code development without running a whole NU-WRF simulation. Code can be run either in a single CPU or in parallel, and users can specify input WRF output files and subset domains.The

postproc/GRAD/component contains the Goddard RADiation (GRAD) simulator, an end-to-end radiation development tool. It reads WRF output and conducts radiative transfer for one-time step. Like GMP, it is a very useful tool for independent code development without running a whole NU-WRF simulation and code can be run serially or in parallel.The

utils/lisWrfDomain/utility contains the NASA LISWRFDOMAIN software for customizing LDT and LIS ASCII input files so their domain(s) (grid size, resolution, and map projection) match that of WRF. It uses output from the WPS GEOGRID program to determine the reference latitude and longitude.The

utils/geos2wrf/utility contains version 2 of the NASA GEOS2WRF software, which extracts and/or derives atmospheric data from the Goddard Earth Observing System Model Version 5 [(Rienecker et al. 2008); http://gmao.gsfc.nasa.gov/GMAO_products] for input into WRF. It also contains MERRA2WRF, which can preprocess atmospheric fields from the Modern-Era Retrospective Analysis for Research and Applications [MERRA; (Rienecker et al. 2011)] hosted by the Goddard Earth Sciences Data Information Services Center (GES DISC; http://daac.gsfc.nasa.gov), as well as MERRA-2 reanalyses (Bosilovich et al. 2015) also hosted by GES DISC. These programs essentially take the place of UNGRIB in WPS, as UNGRIB cannot read the netCDF, HDF4, or HDFEOS formats used with GEOS-5, MERRA, and MERRA-2. This includes a python script for downloading and processing MERRA-2 files from the MERRA2 data server (only works in NCCS systems).The

utils/sst2wrf/utility contains the NASA SST2WRF preprocessor, which reads sea surface temperature (SST) analyses produced by Remote Sensing Systems (http://www.remss.com) and converts into a format readable by the WPS program METGRID. This essentially takes the place of UNGRIB as the SST data are in a non-GRIB binary format. This includes a python script for downloading data from the RSS web page.The

utils/ndviBareness4Wrf/utility contains the NASA NDVIBARENESS4WRF preprocessor, which reads gridded Normalized Difference Vegetation Index (NDVI) data and calculates a “surface bareness” field for use by the NU-WRF dynamic dust emissions scheme. Currently, the software reads NDVI produced from the NASA Global Inventory Modeling and Mapping Studies (GIMMS) group and by the NASA Short-term Prediction Research and Transition Center (SPoRT). Both products are based on observations from the Moderate Resolution Imaging Spectroradiometer (MODIS), which is flown on the NASA Terra and Aqua satellites. The preprocessor outputs both the NDVI and the derived bareness fields in a format readable by the WPS program METGRID.The

utils/prep_chem_sources/utility contains a modified copy version of the community PREP_CHEM_SOURCES version 1.8.3 preprocessor. This program prepares anthropogenic, biogenic, wildfire, and volcanic emissions data for further preprocessing by the WRF-Chem preprocessor CONVERT_EMISS. The NU-WRF version of PREP_CHEM_SOURCES uses the WPS map projection software to ensure consistency in interpolation. It also adds support for GFEDv4 biomass burning emissions [see (Randerson et al. 2015)], NASA QFED wildfire emissions (Darmenov and Silva 2013), support for new 72-level GOCART background fields, improved interpolation of the GOCART background fields when the WRF grid is at a relatively finer resolution, and output of data for plotting with the NASA PLOT_CHEM program.The

utils/plot_chem/utility stores a simple NCAR Graphics based PLOT_CHEM program for visualizing the output from PREP_CHEM_SOURCES. This program is only intended for manual review and sanity checking, not for publication quality plots.The

utils/gocart2wrf/utility is a NASA program for reading GOCART aerosol data from an offline GOCART run (Chin et al. 2002), online GOCART with GEOS-5, MERRAero (Kishcha et al. 2014), or MERRA-2 (Bosilovich et al. 2015) files; interpolate the data to the WRF grid; and then add the data to netCDF4 initial condition and lateral boundary condition (IC/LBC) files for WRF. This includes a script for downloading and processing MERRA-2 files from the GES DISC web page.The

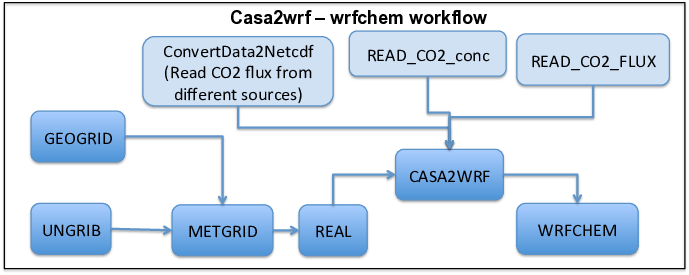

utils/casa2wrf/utility contains the NASA CASA2WRF preprocessor and related software to read CO\(_2\) emissions and concentrations from the CASA biosphere model, and interpolate and append the data to the WRF netCDF4 IC/LBC files (Tao, Jacob, and Kemp 2014).The

utils/lis4scm/utility contains the NASA LIS4SCM preprocessor to generate horizontally homogeneous conditions in a LIS parameter file (produced by LDT), an LDT output file normally generated for the WRF REAL preprocessor, and a LIS restart file. These updated files are part of the initial conditions for NU-WRF (WRF and LIS coupled) in idealized Single Column Mode (see section 5.3).

In addition, NU-WRF includes a collection of scripts to help build, test, and port the framework. These scripts are found under scripts/ and include a unified build system written in Python that eases the compilation of the different NU-WRF components and automatically resolves several dependencies between the components (e.g., WPS requires WRF to be compiled first). The build system is discussed in section 4.3. Note that the NU-WRF build system does not currently support compilation of the 2-D “ideal” data cases or the 3-D WRF-Fire data case [see Chapter 4 of (NCAR 2022)].

Obtaining Software

Software Usage Agreement

The release of NU-WRF software is subject to NASA legal review and requires users to sign a Software Usage Agreement. Carlos Cruz (carlos.a.cruz@nasa.gov) and Toshi Matsui (toshihisa.matsui-1@nasa.gov) are the points of contact for discussing and processing requests for the NU-WRF software.

Note there are three broad categories for software release:

US Government – Interagency Release. A representative of a US government agency should initiate contact and provide the following information:

The name and division of the government agency

The name of the Recipient of the NU-WRF source code

The Recipient’s title/position

The Recipient’s address

The Recipient’s phone and FAX number

The Recipient’s e-mail address

US Government – Project Release under a Contract. If a group working under contract or grant for a US government agency requires the NU-WRF source code for the performance of said contract or grant, then a representative should initiate contact and provide a copy of the grant or contract cover page. Information should include the following:

The name and division of the government agency

The name of the Recipient of the NU-WRF source code

The Recipient’s title/position

The Recipient’s address

The Recipient’s phone and FAX number

The Recipient’s e-mail address

The contract or grant number

The name of the Contracting Officer

The Contracting Officer’s phone number

The Contracting Officer’s e-mail address

All Others. Those who do not fall under the above two categories but who wish to use NU-WRF software should initiate contact to discuss possibilities for collaborating. Note, however, that NASA cannot accept all requests due to legal constraints.

NU-WRF Repository

Current source development is managed in a GitLab repository at https://git.smce.nasa.gov/astg/nuwrf/nu-wrf-dev. To get access to the repository, contact Carlos Cruz (carlos.a.cruz@nasa.gov). Once access is granted, the user can obtain the source code using the following command [1].

git clone --recurse-submodules git@git.smce.nasa.gov:astg/nuwrf/nu-wrf-dev.git

The develop branch should not only be production-ready but will also contain updates and bug fixes.

Developers who wish to collaborate are encouraged to clone the Git repository and create their feature branches in their local repositories. Later, these features can be merged into the develop or master branch via a pull request. This process requires that the code pass certain testing and validation criteria and finally be approved by the NU-WRF core developers. For more information on using Git with NU-WRF see https://nuwrf.gsfc.nasa.gov/sites/default/files/docs/git-intro.pdf.

Tar File

Users can also be provided with a compressed tar file containing the entire NU-WRF source code distribution. However, it is strongly recommended that users access the source code using the Git repository. Also, note that only NU-WRF project members have access to tar files pre-staged on the NASA Discover and Pleiades supercomputers. Two variants are available: gzip compressed (nu-wrf_v11.5-wrf46-lisf.tgz) and bzip2 compressed (nu-wrf_v11.5-wrf46-lisf.tar.bz2). Bzip2 compression generates slightly smaller files but can take considerably longer to decompress.

To untar, type either:

tar -zxf nu-wrf_v11.5-wrf46-lisf.tgz

or

bunzip2 nu-wrf_v11.5-wrf46-lisf.tar.bz2

tar -xf nu-wrf_v11.5-wrf46-lisf.tar

A nuwrf_v11.5-wrf46-lisf/ directory should be created.
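The two extraction routes can also be folded into one small helper. The following is a minimal sketch, assuming a tar with built-in gzip and bzip2 support (-z and -j) is on the PATH; the function name extract_nuwrf is hypothetical and is not shipped with NU-WRF.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of NU-WRF): extract a release tarball,
# choosing the tar decompression flag from the file suffix.
extract_nuwrf() {
    local tarball="$1"
    case "$tarball" in
        *.tgz|*.tar.gz) tar -xzf "$tarball" ;;   # gzip-compressed
        *.tar.bz2)      tar -xjf "$tarball" ;;   # bzip2-compressed
        *.tar)          tar -xf  "$tarball" ;;   # already decompressed
        *)
            echo "unrecognized archive type: $tarball" >&2
            return 1
            ;;
    esac
}
```

For example, extract_nuwrf nu-wrf_v11.5-wrf46-lisf.tar.bz2 decompresses and unpacks in one step, which avoids the intermediate .tar file left behind by bunzip2.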

Directory Structure

The source code directory structure is as follows:

The GSDSU/, LISF/, utils/, WPS/, and WRF/ folders contain the source codes summarized in the Components section above. The ARWpost/, RIP4/, UPP/, and MET/ components are automatically built on the Discover system. On non-Discover systems, users will be prompted to download the component(s) so that they can be built. Copies of RIP4/ and UPP/, which contain NU-WRF specific enhancements, can be provided upon request.

The docs/ folder contains reStructuredText documentation to generate this document (in docs/userguide/) as well as tutorial documentation (in docs/tutorial/).

The scripts/ folder contains scripts for various tasks, including building NU-WRF and running regression tests.

Sample input files for RIP4 are stored in the rip/ subfolder.

The other/ subfolder contains scripts that facilitate the installation of the required NU-WRF libraries (see External Libraries and Tools) and a script to set up the correct module environment on supported platforms.

Finally, the python/ subfolder contains five subfolders:

A utils/ subfolder with Python scripts to download SST data from NASA SPoRT, SSTRSS data from http://data.remss.com/, and MERRA data from NASA. There are also other Python utilities that can be used in specific workflows.

A build/ subfolder with scripts and configuration files used to build NU-WRF.

A regression/ subfolder with scripts and configuration files used to regression test NU-WRF.

A shared/ subfolder with scripts shared by other scripts.

A devel/ subfolder with a script to generate a release tarball.

The testcases/ folder contains sample namelist and other input files for NU-WRF for different configurations (simple WRF, WRF with LIS, WRF-Chem, and WRF-KPP) used by the regression testing mechanism. The tutorial/ folder contains sample namelist and other input files used for the NU-WRF tutorials (https://nuwrf.gsfc.nasa.gov/nu-wrf-tutorials).

The main directory also includes a small bash script that interfaces with the NU-WRF build system (discussed in Build System) and a README file.

Building Software

Compilers

The NU-WRF source code requires Fortran 90/2003, C, and C++ compilers. The development version supports Intel compilers (ifort, icc, and icpc) on Discover (version 2021.4.0) and Pleiades (version 2020.4.304). GCC (version 12.3 on Discover, 9.3 on Pleiades) is also required for library compatibility for icpc. The master branch also works with GNU compilers and OpenMPI on Discover.

External Libraries and Tools

A large number of third-party libraries must be installed before building NU-WRF. Except as noted, the libraries must be compiled using the same compilers as NU-WRF, and it is highly recommended that static library files be created and linked rather than shared objects. Included with the NU-WRF source code are scripts (see scripts/other/baselibs.*) that facilitate the installation of all software packages on Discover and Pleiades. The scripts can be adapted to other systems with modifications. The list of libraries is as follows:

An MPI library. (By default, NU-WRF uses Intel MPI on Discover and Pleiades.)

BOXMG 0.1 (Used to build with WRF_ELEC option).

BUFRLIB 11.0

CAIRO 1.14.8

ECCODES 2.23.0

ESMF 8.5.0 compiled with MPI support.

FORTRANGIS 3.0

FREETYPE 2.11.0

FLEX (can use precompiled system binary on Linux).

G2CLIB 1.6.0

GDAL 2.4.4

GEOTIFF 1.5.1

GHOSTSCRIPT 8.11

GRIB_API 1.17.0

GSL 2.3

HDF4 4.2.16

HDF5 1.14.3

HDF-EOS 2.3.0

JASPER 2.0.14

JPEG 9b.

LIBGEOTIFF 1.7.1.

LIBPNG 1.6.37.

NCAR Graphics 6.0.0. (Newer versions of NCAR Graphics require compilation and linking to the CAIRO library, which is currently only supported by MET.)

NETCDF 4.9.2 (C library), NETCDF-Fortran 4.6.1, and NETCDF-CXX 4.3.0.1 (C++ library), built with HDF5 compression.

OPENJPEG 2.5.2.

PROJ 9.3.

PIXMAN 0.40.0.

SQLITE 3.43.02

YACC (can use preinstalled version on Linux).

ZLIB 1.2.11.

In addition to the above libraries, you may need to install the curl library if it is not already installed on your system. NU-WRF also requires perl, python3, bash, tcsh, gmake, sed, awk, m4, and the UNIX uname command to be available on the computer.
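A quick way to confirm that these command-line tools are present before starting a build is sketched below. It is a minimal sketch that relies only on the standard command -v shell builtin; the function name missing_tools is hypothetical and is not part of NU-WRF, and checking for a curl executable is only a rough stand-in for the curl library.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of NU-WRF): print each named command that
# cannot be found on the PATH, one per line, and return non-zero if any
# command is missing.
missing_tools() {
    local tool status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            status=1
        fi
    done
    return "$status"
}

# Tool names taken from the paragraph above, plus the curl executable.
missing_tools perl python3 bash tcsh gmake sed awk m4 uname curl \
    || echo "install the tools listed above before building" >&2
```

An empty listing means every tool was found. On systems where GNU make is installed only as make, gmake will be reported as missing and may need a symbolic link or alias.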

The tarballs for the above packages can be obtained from:

https://portal.nccs.nasa.gov/datashare/astg/nuwrf/baselibs/

Build System

Each component of the NU-WRF modeling system has a unique compilation mechanism, ranging from simple Makefiles to sophisticated Perl and shell scripts. To make it easier for the user to create desired executables and to resolve dependencies between components, NU-WRF includes a build layer that glues together the entire framework. The build layer consists of a Python 3 script system driven by a configuration file: a text file, in a structure readable by Python, that defines the build environment, library paths, and build options.

The build system is shipped with the code and is found in the scripts/python/build subfolder. It includes a configuration file, nu-wrf.cfg, that contains settings for Discover and Pleiades but can easily be configured for other systems. The settings in the configuration file allow users to specify configure options for the ARWPOST, RIP4, UPP, WPS, and WRF components, as well as template Makefile names for the GSDSU, LVT, and MET components. Not all components need a configuration (e.g. utils), but those that do are specified in separate sections. The provided configuration file should aid in porting NU-WRF to work with new compilers, MPI implementations, and/or operating systems, a process discussed more fully in the Porting NU-WRF section.

The build system is invoked from the top-level NU-WRF directory by executing the build.sh script, which is a thin layer over the Python build system. Running build.sh without arguments will print a usage page with the following information:

Target. A required argument that defines the NU-WRF component to build. See list of valid targets below.

Installation. The -p, --prefix flag followed by a path will install all built executables in the specified path. If the flag is skipped, build.sh will install the executables in the model directory.

Configuration. The -c, --config flag is followed by the name of a configuration file that specifies critical environment variables (e.g., the path to the netCDF library). Users may develop their own configuration file to customize settings (see Porting NU-WRF). If the config flag is skipped, build.sh will default to nu-wrf.cfg, which works on Discover and Pleiades systems. The software will exit if it is on an unrecognized computer.

Options. The -o, --options flag specifies the target options. Valid options are cleanfirst, debug, rebuild, and/or nest=n, where n is an integer ranging from 1 to 3. Multiple options must be comma-separated, e.g. cleanfirst,debug,nest=2. Note that only one nest option can be specified at a time.

The cleanfirst option will cause the build system to “clean” a target (delete object files and static libraries) before starting compilation.

The debug option forces some build subsystems (WRF, WPS, LISF, utils) to use alternative compilation flags set in the configuration file (e.g., for disabling optimization and turning on run-time array bounds checking). This option is currently ignored by the other NU-WRF components (ARWpost, GSDSU, MET, RIP4, UPP).

The rebuild option is used to rebuild the WRF executable, when only WRF code has been modified, while skipping LIS recompilation. It can thus speed up the regeneration of the WRF executable. It has no effect on other components.

The nest=n option specifies compiling WRF with basic nesting (n=1), preset-moves nesting (n=2), or vortex-tracking nesting (n=3). Basic nesting is assumed by default. Note that WRF cannot be run coupled to LIS if preset-moves or vortex-tracking nesting is used. Similarly, WRF-Chem only runs with basic nesting.

The -e flag generates a file, nu-wrf.envs, that specifies all the NU-WRF configuration variables in a bash-script format. This can be useful for debugging or porting to other systems.

Targets. At least one target is required and multiple targets must be comma-separated. The recognized targets are (note that all* and ideal* targets are mutually exclusive):

The all target compiles all executables without WRF-Chem.

The allchem target compiles all executables with WRF-Chem but without the Kinetic Pre-Processor.

The allclean target deletes all executables, object files, and static libraries.

The allkpp target compiles all executables with WRF-Chem, including the Kinetic Pre-Processor.

The arwpost target compiles executables in the ARWpost/ directory.

The casa2wrf target compiles executables in the utils/casa2wrf/ directory.

The chem target compiles executables in the WRF/ directory (except for LIS) with WRF-Chem support but without the Kinetic Pre-Processor. The compiled executables include CONVERT_EMISS.

The gocart2wrf target compiles executables in the utils/gocart2wrf/ directory.

The gsdsu target compiles executables in the GSDSU/ directory.

The ideal_* targets compile ideal preprocessors in the WRF/ directory. These include:

ideal_scm_lis_xy: Produces inputs for running WRF-LIS in Single Column Mode.

ideal_b_wave: Baroclinically unstable jet u(y,z) on an f-plane.

ideal_convrad: Idealized 3D convective-radiative equilibrium test with constant SST and full physics at cloud-resolving 1 km grid size.

ideal_heldsuarez: Held-Suarez coarse-resolution global forecast model.

ideal_les: An idealized large-eddy simulation (LES).

ideal_quarter_ss: 3D quarter-circle shear supercell simulation.

ideal_scm_xy: Single column model.

ideal_tropical_cyclone: Idealized tropical cyclone on an f-plane with constant SST in a specified environment.

The kpp target compiles executables in the WRF/ directory (except for LIS) with WRF-Chem and Kinetic Pre-Processor support. The compiled executables include CONVERT_EMISS.

The ldt target compiles executables in the LISF/ldt/ directory.

The lis target compiles the LIS standalone executable in the LISF/lis/make/ directory.

The lisWrfDomain target compiles executables in the utils/lisWrfDomain/ directory.

The lis4scm target compiles executables in the utils/lis4scm/ directory.

The lvt target compiles executables in the LISF/lvt/ directory.

The geos2wrf target compiles executables in the utils/geos2wrf/ directory (both GEOS2WPS and MERRA2WRF).

The met target compiles executables in the MET/ directory.

The ndviBareness4Wrf target compiles executables in the utils/ndviBareness4Wrf/ directory.

The plot_chem target compiles executables in the utils/plot_chem/ directory.

The prep_chem_sources target compiles executables in the utils/prep_chem_sources/ directory.

The rip target compiles executables in the RIP4/ directory.

The sst2wrf target compiles executables in the utils/sst2wrf/ directory.

The upp target compiles executables in the UPP/ directory.

The utils target compiles all the executables in the utils/ directory.

The wps target compiles executables in the WPS/ directory.

The wrf target compiles executables in the WRF/ directory with LIS-coupling support and without WRF-Chem support.

The wrfonly target is like the wrf target but without LIS coupling support. Use this target when no LISF packages are needed; it significantly speeds up compilation.

One complication addressed by the build system is that the WPS and UPP components are dependent on libraries and object files in the WRF/ directory. To account for this, the wrf target will be automatically built if necessary for WPS and/or UPP, even if the wrf target is not listed on the command line.
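The dependency rule just described can be illustrated with a short sketch. This is an illustration only, not the logic of the NU-WRF Python build system: the function name with_wrf_dependency is hypothetical, the sketch ignores whether WRF has already been built, and for brevity it does not consider the other targets that also build WRF (such as wrfonly, chem, or kpp).

```shell
#!/usr/bin/env bash
# Illustration only: NOT the NU-WRF build system's implementation.
# Prepend "wrf" to a comma-separated target list when wps or upp is
# requested and wrf is not already listed.
with_wrf_dependency() {
    local targets="$1"
    case ",$targets," in
        *,wrf,*)         echo "$targets" ;;      # wrf already requested
        *,wps,*|*,upp,*) echo "wrf,$targets" ;;  # add the prerequisite
        *)               echo "$targets" ;;      # nothing depends on wrf
    esac
}

with_wrf_dependency "wps,upp"   # prints: wrf,wps,upp
```

Wrapping the list in commas before matching keeps a target such as wrfonly from being mistaken for wrf.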

A second complication is that the coupling of LIS to WRF requires linking the WRF/ code to the ESMF and ZLIB libraries. As a result, the configure.wrf file [see Chapter 2 of (NCAR 2022)] is modified to link against these libraries. A similar modification occurs for UPP. (No modification is needed for WPS as long as WPS is compiled with GRIB2 support.)

Finally, if dependent components (WRF, WPS, UPP, LIS) were previously built with different compilation flags, with different compiler versions, or with a different chemistry setting, the build system will clean the associated directories.

The most straightforward way to compile the full NU-WRF system on Discover or Pleiades is to type ./build.sh all in the top-level directory. If chemistry is required, the command is ./build.sh allchem (./build.sh allkpp if KPP-enabled chemistry is needed). To fully clean the entire system, run ./build.sh allclean.

Finally, the user can selectively build components by listing specific targets. For example, to build the WRF model without chemistry along with WPS and UPP, type ./build.sh wrf,wps,upp.
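Because the -o options string has a few rules (a fixed set of option names, n between 1 and 3, and at most one nest option), it can be convenient to check it before starting a long build. The following is a minimal sketch of such a check; the function name check_build_options is hypothetical and is not part of NU-WRF, and build.sh performs its own validation regardless.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of NU-WRF): validate a comma-separated
# build.sh options string against the rules described in this section.
check_build_options() {
    local opts="$1" opt nest_count=0
    local IFS=','                      # split the string on commas
    for opt in $opts; do
        case "$opt" in
            cleanfirst|debug|rebuild) ;;                 # plain options
            nest=[1-3]) nest_count=$((nest_count + 1)) ;;
            *)
                echo "invalid option: $opt" >&2
                return 1
                ;;
        esac
    done
    if [ "$nest_count" -gt 1 ]; then
        echo "only one nest option may be given" >&2
        return 1
    fi
    return 0
}

check_build_options "cleanfirst,debug,nest=2" && echo "options ok"
```

A validated string would then be passed to the build script, for example as ./build.sh -o cleanfirst,debug,nest=2 wrf. The placement of the flag before the target is an assumption here; running build.sh without arguments prints the authoritative usage.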

LIBDIR_TAG

As discussed earlier (section External Libraries and Tools), a large number of external libraries, or baselibs, must be installed before NU-WRF can be built. More importantly, these baselibs must be compiled using the same compilers as NU-WRF. Thus, the build system parses the configuration file, nu-wrf.cfg, and, if on Discover or Pleiades systems, it uses pre-installed baselibs determined by the value of the LIBDIR_TAG environment variable set in scripts/other/set_module_env.bash. However, when porting the code to a different system and/or using a newer compiler the environment variable must be set by the user in the configuration file.

For more information see Porting NU-WRF.

Front-End Workflows

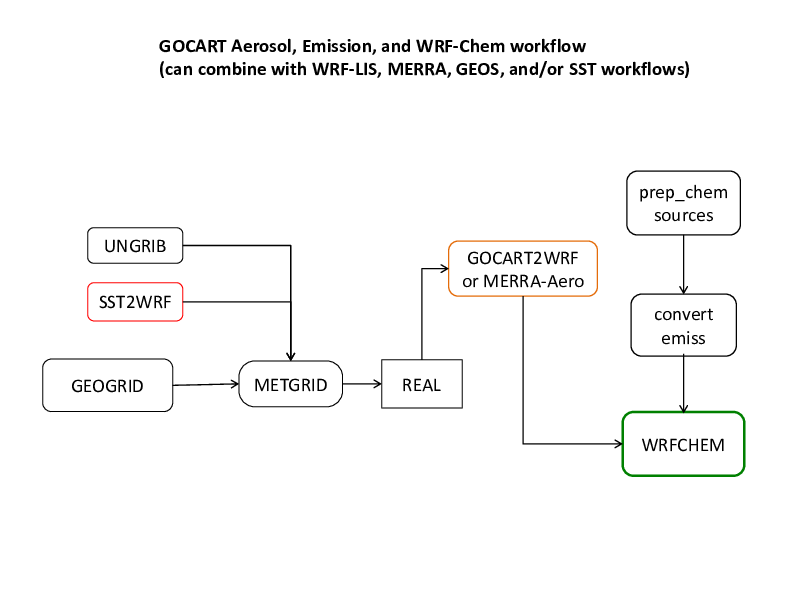

In this section we will summarize several “front-end” workflows involving the main NU-WRF model and different pre-processors. (Post-processing is discussed in the Post-Processors section.) The intent is to illustrate the roles of the pre-processors within the NU-WRF system, and to show several different configurations possible with NU-WRF (e.g., advanced land surface initialization, aerosol coupling, and CO\(_2\) tracer simulation). Finally, note that these workflows can be executed, end-to-end, as defined by regression test templates which are discussed later in this chapter.

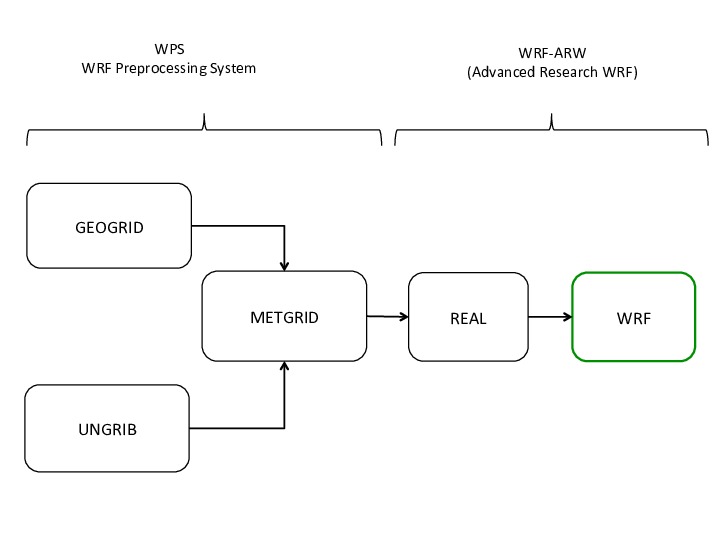

Basic Workflow

This is the simplest approach to running simulations with NU-WRF. Neither chemistry nor advanced land surface initialization is used, so the user should compile NU-WRF with ./build.sh wrf,wps.

WPS. The user must edit a namelist.wps file to customize the WRF domains, set the start and end dates, set the file formats, and provide information on desired terrestrial data and file prefixes. Sample namelist.wps files can be found in the WPS/, defaults/, and testcases/ directories. The user must then run the following programs [see Chapter 3 of NCAR 2022].

- GEOGRID. This program will interpolate static and climatological terrestrial data (land use, albedo, vegetation greenness, etc.) to each WRF grid. The user should use the GEOGRID.TBL.ARW located in the WPS/geogrid/ directory to specify interpolation options for each dataset selected in namelist.wps. (Alternative GEOGRID.TBL.ARW.* files are available for chemistry cases.) The user is also responsible for obtaining the geog/ dataset from NCAR for processing by GEOGRID. Sample run scripts are available in the scripts/ directory.

- link_grib.csh. This script is used to create symbolic links to the GRIB or GRIB2 files that are to be processed. The links follow a particular naming convention (GRIBFILE.AAA, GRIBFILE.AAB, …, GRIBFILE.ZZZ) that is required by UNGRIB.

- UNGRIB. This program will read GRIB or GRIB2 files with dynamic meteorological and dynamic terrestrial data (soil moisture, soil temperature, sea surface temperature, sea ice, etc.) and write specific fields in WPS intermediate format. If necessary, the user is also responsible for obtaining the GRIB/GRIB2 data and storing them in an appropriate location. The user must select an appropriate Vtable file in WPS/ungrib/Variable_Tables/ to specify the fields to be extracted.

- METGRID. This program will horizontally interpolate the output from UNGRIB to the WRF domains, and combine them with the output from GEOGRID. The user must select the METGRID.TBL.ARW file to specify the interpolation methods used by METGRID for each field.

REAL. This program will vertically interpolate the METGRID output to the WRF grid, and create initial and lateral boundary condition files. REAL is described in Chapters 4 and 5 of NCAR 2022. The user must edit a namelist.input file to specify the WRF domains, start and end times, and WRF physics configurations for REAL. A standard WRF land surface model should be selected for this workflow. No chemistry options can be selected. Sample namelist.input files are available in the testcases/ directory.

WRF. This program will perform a numerical weather prediction simulation using the data from REAL. The user needs to change the namelist.input file for specific run cases. A sample namelist.input file can be found in the WRF/run/ directory and in testcases/. WRF is described in Chapter 5 of NCAR 2022. In addition to the normal WRF physics options, the user can specify the new Goddard microphysics (mp_physics=7) and the Goddard 2017 radiation scheme (ra_lw_physics=5 for longwave and ra_sw_physics=5 for shortwave), all without aerosol coupling. The 2017 radiation scheme requires users to create a symbolic link to one file, WRF/run/BROADBAND_CLOUD_GODDARD.bin, and to put that link in the directory where the model is run.

A feature added to NU-WRF is the calculation of mean integrated vapor transport. The user may adjust the time-averaging period for this diagnostic by changing the IVT_INTERVAL flag in the &time_control block of namelist.input. A value of 0 indicates instantaneous values will be output as the “means”, while positive values indicate averaging time periods in minutes.
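
To illustrate the GRIBFILE.AAA, GRIBFILE.AAB, …, GRIBFILE.ZZZ naming convention described for link_grib.csh above, the following minimal Python sketch generates the link names in order and pairs them with input files. It is not the link_grib.csh script itself: the function names and the sample input file names are hypothetical, and the sketch only plans the pairs without creating any links.

```python
from itertools import islice, product
from string import ascii_uppercase


def gribfile_names():
    """Yield GRIBFILE.AAA, GRIBFILE.AAB, ..., GRIBFILE.ZZZ in order."""
    for letters in product(ascii_uppercase, repeat=3):
        yield "GRIBFILE." + "".join(letters)


def plan_links(grib_paths):
    """Pair each input GRIB/GRIB2 path with a link name following the convention."""
    grib_paths = list(grib_paths)
    names = list(islice(gribfile_names(), len(grib_paths)))
    if len(names) < len(grib_paths):
        raise ValueError("more input files than available GRIBFILE suffixes")
    return list(zip(names, grib_paths))


# Hypothetical input file names, for illustration only.
for link_name, target in plan_links(["gfs.t00z.f000", "gfs.t00z.f006"]):
    print(link_name, "->", target)
```

Three uppercase letters allow 26 × 26 × 26 = 17576 distinct link names, which bounds the number of files that can be linked this way in one directory.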

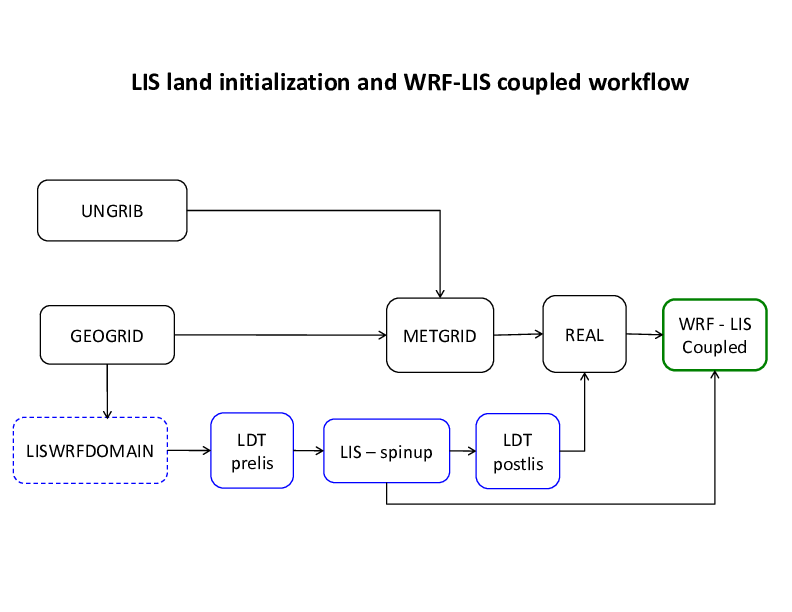

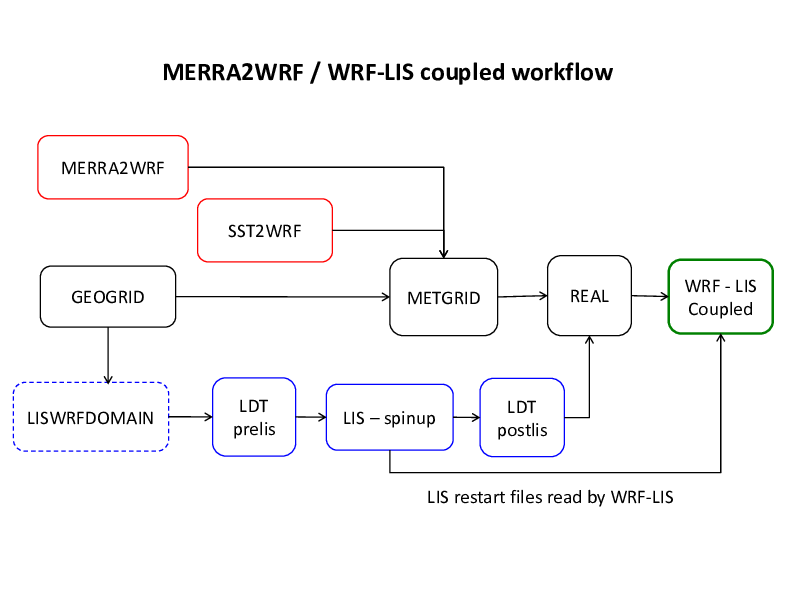

Land Surface Initialization and LIS Coupling

This is a more advanced approach to running simulations with NU-WRF. Instead of using land surface fields interpolated from a coarser model or reanalysis, a custom-made land surface state is created by LIS on the same grid and with the same terrestrial data and land surface physics as WRF. WRF will then call LIS on each advective time step, providing atmospheric forcing data and receiving land surface data (fluxes, albedo, etc) in return.

For simplicity, this workflow uses no chemistry, so the user should compile NU-WRF with ./build.sh wrf,wps,lis,ldt,lisWrfDomain. However, an advanced user can combine this workflow with one of the chemistry workflows described further down; in that case, the user should replace the wrf target with chem (or with kpp if using KPP-based chemistry).

WPS. These steps are identical to those WPS steps in the Basic Workflow section above.

LISWRFDOMAIN. The user must provide a ldt.config file (used by LDT) and a lis.config file (used by LIS). The LISWRFDOMAIN software will read the namelist.wps file and the netCDF4 output files from GEOGRID, and copy the WRF grid information to the two configuration files. LISWRFDOMAIN is divided into two executables: lisWrfDomain (a Fortran compiled program found in utils/bin/) and lisWrfDomain.py (a Python wrapper script found in scripts/python/utils/).

The software can be run as ./lisWrfDomain.py DOMAINPROG LISCONFIG LDTCONFIG WPSDIR, where DOMAINPROG is the path to lisWrfDomain, LISCONFIG is the path to lis.config, LDTCONFIG is the path to ldt.config, and WPSDIR is the directory containing namelist.wps and the GEOGRID netCDF4 output files.

Sample configuration files are provided in the testcases/ directory.

LDT. The user must further customize the ldt.config file and a separate parameter attributes file to specify the static and climatological terrestrial data to be processed in “LSM parameter processing mode” [see NASA 2022a]. The following settings are recommended/supported (please refer to the LDT documentation):

NoahMP.3.6 is the recommended land surface model;

MODIS is the recommended land use dataset (UMD and USGS are also supported);

SRTM30 is the recommended terrain elevation dataset (GTOPO30 is also supported);

STATSGOFAO is the recommended soil texture dataset;

NCEP monthly climatological albedo and max snow free albedo are recommended;

NCEP monthly climatological and maximum/minimum vegetation greenness are recommended;

NCEP slope type is recommended;

Use of the “slope-aspect correction” is recommended to improve soil moisture spin-ups; and

ISLSCP1 deep soil temperature with terrain lapse-rate correction is recommended (not using the lapse rate correction could result in warm biases in high terrain).

LIS. The user must further customize lis.config for a “retrospective” run. This includes specifying the start and end dates of the “spin-up” simulation, identifying the LDT datasets, specifying the land surface model, and identifying the atmospheric forcing datasets. The user must also customize a forcing variables list file compatible with the forcing dataset, and a model output attributes file. All these files are described in more detail in NASA 2022b. Sample forcing variable and output attribute files are provided in the testcases/ directory.

LDT. After running LIS, it is necessary to rerun LDT in “NUWRF preprocessing for real” mode. This requires modifications to ldt.config to specify the static output file from LDT and the dynamic output file from LIS. Fields from both will be combined and written to a new netCDF output file for use by REAL. Sample files are given in testcases/.

REAL. REAL is run similarly to the Basic Workflow configuration, except that it also reads the static and dynamic land surface data collected by LDT. For this to work, the namelist.input file must include an additional namelist block:

    &lis
     lis_landcover_type = 2,
     lis_filename = "lis4real_input.d01.nc"
     use_lis_noahmp = "yes"
    /

Here lis_landcover_type specifies the land use system used with LIS and LDT (1 = USGS, 2 = MODIS, 3 = UMD); lis_filename is an array of character strings specifying the combined LDT/LIS files for each WRF domain; use_lis_noahmp specifies that a NOAHMP LSM will be used (with the “no” option, a regular Noah LSM is assumed); and use_lis_urban specifies that urban physics values generated by the LIS framework will be updated (coupled) into the WRF surface driver.

In addition, the user must specify LIS as the land surface model selection with WRF (sf_surface_physics=55).

The resulting initial and lateral boundary conditions will replace the land surface fields from UNGRIB with those from LDT/LIS.

WRF with LIS. Running WRF in this case is similar to the Basic Workflow case, except that WRF will also read the lis.config file and the LIS restart files that were produced during the “retrospective” run. The user must modify lis.config to run in “WRF coupling mode”, and specify forcing_variables_wrfcplmode.txt as the forcing variables list file. The start mode must also be changed to “restart”, and the time step for each LIS domain must match that used with WRF (specified in namelist.input).

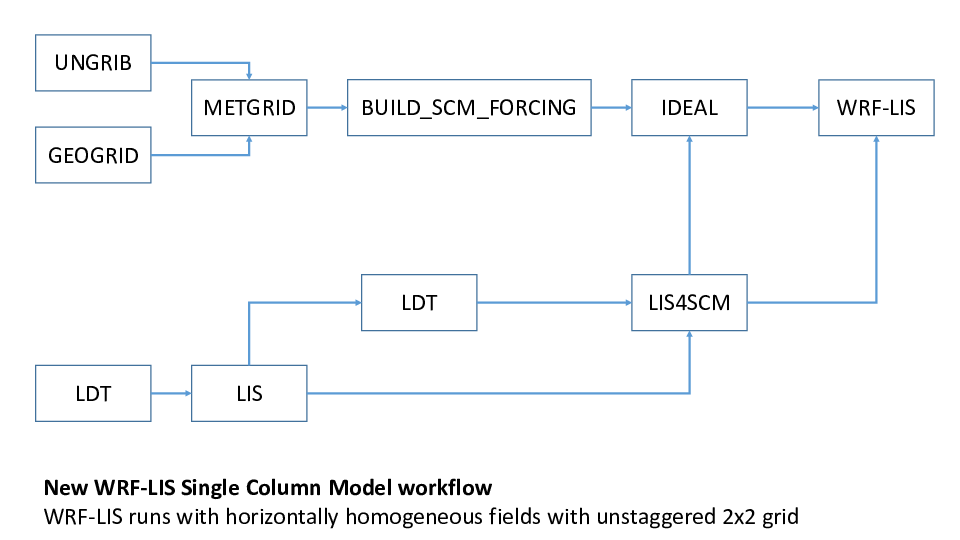

Single Column Model with LIS Coupling

Recently, an idealized configuration for WRF with LIS coupling was added to NU-WRF: the WRF-LIS Single Column Model (SCM) mode. Based on the community SCM mode, the WRF-LIS SCM configuration runs with a \(3 \times 3\) staggered horizontal grid (\(2 \times 2\) for the unstaggered mass grid) and horizontally homogeneous initial conditions. Boundary conditions are periodic. To run the WRF-LIS SCM, the system must be compiled using ./build.sh ideal_scm_lis_xy,wps,lis,ldt,lis4scm, followed by this workflow:

GEOGRID. Run GEOGRID in a \(3 \times 3\) staggered grid centered on a point of interest. Any normal map projection can be used (i.e., Lambert Conformal, Mercator, or Polar Stereographic), but the spatial grid resolution should be small (\(\sim1\) km) to make the projection approximately Cartesian.

UNGRIB. Run UNGRIB to extract initial conditions from a suitable external dataset.

METGRID. Run METGRID to merge the GEOGRID and UNGRIB output together.

BUILD_SCM_FORCING. Configure and run build_scm_forcing.bash in testcases/wrflis/scm. This shell script runs the build_scm_forcing.ncl script under the hood, which extracts column initial conditions and writes them in profile_init.txt and surface_init.txt. (Advanced options also exist to generate forcing data and ensemble perturbations, but these have not been tested yet.)

LDT. Run LDT in “LSM parameter processing mode”. Use a \(2 \times 2\) unstaggered grid with the point of interest at the center of the southwest grid box. A latitude-longitude map projection can be used, but the grid spatial resolution should match that of GEOGRID.

LIS. Run LIS in “retrospective” mode using the same grid configuration as LDT.

LDT. Rerun LDT in “NUWRF preprocessing for real” mode.

LIS4SCM. Edit the namelist.lis4scm file in utils/lis4scm/input/ to enter (1) the name of a LIS restart file generated by the retrospective run, (2) the output file generated by LDT when preprocessing for real, and (3) the parameter file generated by LDT for LIS. Then run LIS4SCM. This program will modify the three data files and make them horizontally homogeneous by copying the values in the southwest corner to the remainder of the grid.

IDEAL. Edit a namelist.input file:

- Make sure to specify a \(3 \times 3\) grid and the same spatial resolution used by GEOGRID.

- Make sure to set io_form_auxinput3 to zero in namelist block &time_control (unless using idealized forcing, which has not been tested).

- Make sure to set the &lis namelist block settings to use the output file generated by LDT for real after it has been modified by LIS4SCM.

- Make sure to set the &scm namelist block [see Chapter 5 of NCAR 2022]. Note that the settings for land use, soil type, vegetation fraction, and canopy water are ignored, as these are provided by LDT and LIS.

- Make sure to set periodic_x and periodic_y in the &bdy_control namelist block to “.true.”.

- Make sure to set pert_coriolis in &dynamics to “.true.”.

- Make sure to set sf_surface_physics in the &physics namelist block to 55 (indicating LIS on-line coupling).

When complete, run the IDEAL program in WRF/test/em_scm_lis_xy/, using only a single processor. This will produce a wrfinput file.

WRF with LIS. Run WRF with the wrfinput file produced by IDEAL and the LIS and LDT files homogenized by LIS4SCM. Make sure to use a single processor. Otherwise, follow the instructions in the above Land Surface Initialization section.

Use of GEOS-5 Meteorological Data

One source of initial and lateral boundary conditions is NASA’s GEOS-5 global model (Rienecker et al. 2008). A number of dataset options exist from GEOS-5, including daily near-real-time simulations (see http://gmao.gsfc.nasa.gov/products), and archived MERRA (Rienecker et al. 2011) and MERRA-2 (Bosilovich et al. 2015) reanalyses (both available from http://disc.sci.gsfc.nasa.gov). GEOS-5 can provide not just meteorological fields (temperature, pressure, wind, and moisture), but also aerosol fields due to the use of the GOCART aerosol module (Chin et al. 2002).

There are several challenges to using GEOS-5 data. First, the GEOS-5 land surface data cannot be used to initialize WRF, due to fundamental differences between the GEOS-5 Catchment LSM (Koster et al. 2000) and the LSMs in WRF. Users are therefore advised to use the GEOS-5 data in a workflow that also includes WRF-LIS (see Land Surface Initialization section above). Second, GEOS-5 aerosol data cannot currently be handled by WPS and REAL, as these tools were designed for meteorological fields (temperature, pressure, wind, and moisture). Processing these aerosol fields requires a special workflow described in the Use of GOCART section.

The remaining issues involve the format and organization of the GEOS-5 data. GEOS-5 writes output in netCDF (and historically HDF4 and HDFEOS2) instead of GRIB or GRIB2; GEOS-5 allows user-specification of variables and variable names for different output files, leading to wide variations between simulations; and GEOS-5 often does not output all the variables expected by WPS. To address these issues, special preprocessing software has been developed: GEOS2WRF (a collection of utilities designed for customized processing of GEOS-5 data, including derivation of missing variables), and MERRA2WRF (a monolithic program customized to process 6-hourly MERRA and MERRA-2 reanalyses).

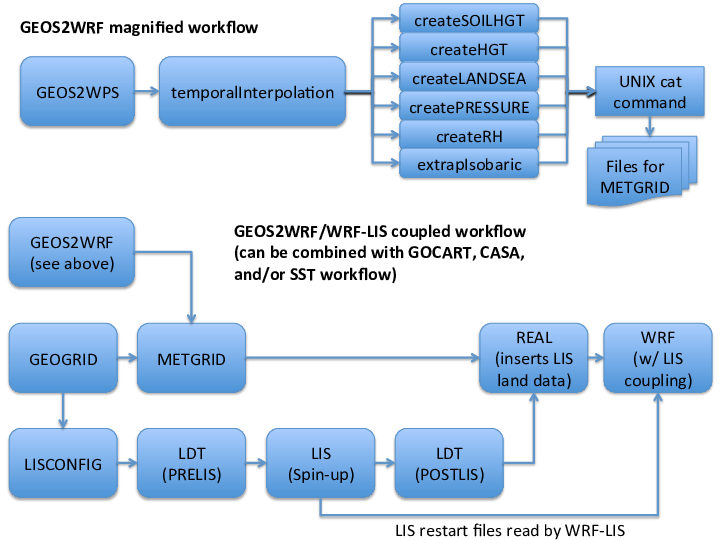

GEOS2WRF

GEOS2WRF can be broken down into five main sub-groups:

Front-end conversion.

- GEOS2WPS. A front-end converter that can read HDF4, netCDF3, and netCDF4 files with GEOS-5 data. A namelist.geos2wps file is read in as input, and must be customized to list the location, name, and format of the GEOS-5 file; the names of the coordinate arrays in the GEOS-5 file; the number of time slices, the indices of the slices, valid times and forecast hours; and the number of variables to process, along with their names, ranks, input and output names, units, and descriptions. This program takes the place of UNGRIB. The output from GEOS2WPS is written in WPS intermediate format [see Chapter 3 of NCAR 2022], with the filename convention $VARNAME_$LEVELTYPE:$YYYY-$MM-$DD_$HH, where $VARNAME is the variable name, $LEVELTYPE is a string describing the type of level the data are on, $YYYY is the 4-digit year, $MM is the 2-digit month, $DD is the 2-digit day, and $HH is the 2-digit hour. Some example output file names are:

      TT_MODEL_LEVEL:2009-08-25_00               # Temperature on model levels
      PSFC_GROUND_LEVEL:2009-08-25_00            # Surface pressure
      PMSL_MEAN_SEA_LEVEL:2009-08-25_00          # Mean sea level pressure
      VV_10M_ABOVE_GROUND_LEVEL:2009-08-25_00    # 10-meter V winds

  The $VARNAMEs (TT, PSFC, PMSL, and VV above) are listed in namelist.geos2wps, and can be customized by the user; however, they must match the values in the METGRID.TBL look-up file used by METGRID for those variables to be processed by WPS. (Intermediate variables used to derive other variables for WPS do not have this naming restriction.)

Temporal interpolation.

- temporalInterpolation. This program takes WPS intermediate file format data and linearly interpolates in time. This can be used, for example, to interpolate 6-hourly MERRA-2 analyses at 00Z, 06Z, 12Z, and 18Z to 3-hourly intervals for initializing WRF at 03Z, 09Z, 15Z, or 21Z. Only a single, user-specified variable will be processed during a particular program invocation, and other variables in the input data files will be ignored. A namelist.temporalInterpolation file is used to specify the variable, input, and output data files.

Variable-derivation. Multiple tools exist for deriving variables required by WRF from existing variables. These should be used on an as-needed basis depending on the contents of the GEOS-5 files. Current programs in this category are:

- createSOILHGT. A utility that reads in a WPS file with surface geopotential, and calculates the surface terrain field. The output WPS file will be named SOILHGT_GROUND_LEVEL:$YYYY-$MM-$DD_$HH. A namelist.createSOILHGT file is also used as input.

- createHGT. A utility that reads in a WPS file with model layer pressure thicknesses, model layer temperatures, model layer specific humidity, and the model terrain field, and derives the geopotential heights on the GEOS-5 model levels. The output WPS files will be named HGT_MODEL_LEVEL:$YYYY-$MM-$DD_$HH. A namelist.createHGT file is also used as input. This program is not needed when processing isobaric levels.

- createLANDSEA. A utility that reads in a WPS file with “lake fraction” and “ocean fraction” and derives a land-sea mask. The output WPS files will be named LANDSEA_GROUND_LEVEL:$YYYY-$MM-$DD_$HH. A namelist.createLANDSEA file is also used as input.

- createPRESSURE. A utility that reads in a WPS file with model layer pressure thicknesses, and calculates the (mid-layer) pressures. The output WPS files will be named PRESSURE_MODEL_LEVEL:$YYYY-$MM-$DD_$HH. A namelist.createPRESSURE file is also used as input. This program is not needed when processing isobaric levels.

- createRH. A utility that reads in a WPS file with either model or isobaric level temperatures, specific humidity, and pressure, plus optional surface pressure, 2-meter temperature, and 2-meter specific humidity, and derives relative humidity on those levels. The output WPS files will have prefixes of RH_2M_ABOVE_GROUND_LEVEL, RH_MODEL_LEVEL, and/or RH_ISOBARIC_LEVEL, and will end with the familiar $YYYY-$MM-$DD_$HH string. A namelist.createRH file is also used as input. This program is recommended because some versions of REAL do not correctly interpolate specific humidity, and because the WRF definition of RH is strictly w.r.t. liquid while some versions of GEOS-5 output a weighted average of RH w.r.t. liquid and ice that is a function of temperature.

Extrapolation.

- extrapIsobaric. A utility that reads in a WPS file with geopotential height, temperature, relative humidity, U and V winds all on isobaric levels and extrapolates to those levels that are underground. The RH, U, and V nearest the ground are simply copied downward, while a specified lapse rate is used for temperature and the hypsometric equation is used for geopotential height. The output WPS files will be called ISOBARIC:$YYYY-$MM-$DD_$HH and will contain all the isobaric data (original data above ground, extrapolated data below ground). A namelist.extrapIsobaric file is also used as input. This program is not necessary when processing GEOS-5 model-level data, since the GEOS-5 coordinate is terrain following. Users are advised to use the model-level data whenever possible.

Splitter utility.

- splitWPS. A utility that reads in a WPS file and divides the data into new WPS files, each file containing a single 2D slab of data. The output WPS files will be called $VARNAME_$LEVEL:$YYYY-$MM-$DD_$HH, where $LEVEL is the “level code” for the slab. The “level code” follows WPS convention: pressure levels are simply the pressure in Pa; model levels are the indices of the slice (“1” indicates model top in GEOS-5); ground level, 2-meter AGL, and 10-meter AGL are represented as “200100”; and the mean sea level is represented as “201300”. A namelist.splitWPS file is also used as input. This program is not required for preparing data for WPS, but instead allows breaking up a WPS file into individual fields for examination.

To proceed, the user must first compile the GEOS2WRF software with ./build.sh geos2wrf. The user must then review the GEOS-5 data available to them and identify time slices and date/time stamps of interest, and the variables that can be used as-is by WRF. WRF will ultimately require the following fields on either isobaric or GEOS-5 model levels:

pressure;

geopotential height;

horizontal winds;

temperature; and

moisture (preferably relative humidity w.r.t. liquid).

Recommended fields that are useful for interpolating or extrapolating near the WRF model terrain level include:

surface pressure;

sea level pressure;

land-sea mask;

sea-ice fraction;

2-m temperature;

2-m relative humidity;

10-m horizontal winds;

skin temperature; and

terrain height.

With this list in mind, the user must also identify GEOS-5 variables that can be used to derive other variables for WRF. From the utilities listed above, the following derivations can be made:

Surface geopotential can be used to derive terrain height (via createSOILHGT).

Lake fraction and ocean fraction can be used together to derive a land-sea mask (via createLANDSEA).

Model layer pressure thicknesses can be used to derive model layer pressures (via createPRESSURE).

Model layer pressure thicknesses can also be used (with model layer temperatures, model layer specific humidity, and the model terrain field) to derive model layer geopotential heights (via createHGT).

Relative humidity on model levels, isobaric levels, and near ground level can be derived from model, isobaric, and 2-meter temperatures, model, isobaric, and 2-meter specific humidity, and model, isobaric, and surface pressure (via createRH).

Isobaric geopotential height, temperature, relative humidity, U and V winds can be extrapolated underground (via extrapIsobaric).

After assembling the list of variables, the user should run GEOS2WPS using a customized namelist.geos2wps for each GEOS-5 file. To execute, simply type ./geos2wps.x if the executable is in the current directory.

After extracting all the GEOS-5 variables, the user must employ the necessary utilities to derive the remaining variables for WRF. The appropriate namelist file (e.g., namelist.createHGT) must be customized, and the user must use the UNIX cat command to collect the relevant WPS files together. When ready, the user will execute by typing the program name (e.g., ./createHGT).
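
For users who drive these steps from a script, the file collection performed with the UNIX cat command can be sketched in Python as a plain byte-for-byte concatenation. The function name and the file names in the usage comment are hypothetical; the sketch assumes, as the cat-based instructions imply, that the inputs can simply be appended one after another.

```python
import shutil


def concatenate_files(input_paths, output_path):
    """Append the bytes of each input file to output_path, like `cat a b > out`."""
    # output_path must not be one of the inputs, since it is truncated on open.
    with open(output_path, "wb") as out:
        for path in input_paths:
            with open(path, "rb") as src:
                shutil.copyfileobj(src, out)


# Example call with hypothetical file names:
# concatenate_files(
#     ["DELP_MODEL_LEVEL:2009-08-25_00", "TT_MODEL_LEVEL:2009-08-25_00"],
#     "GEOS:2009-08-25_00",
# )
```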

The namelist.geos2wps file contains the following information:

| Variable Names | Description |
|---|---|
| &files | |
| geosFileFormat | Integer, specifies GEOS-5 file format: 1 = HDF4; 2 = netCDF3 or netCDF4; 4 = HDFEOS2. |
| geosFileName | String, specifies GEOS-5 input file name to read. |
| outputDirectory | String, directory name to write WPS file. |
| &coordinates | |
| longitudeName | String, name of 1-D longitude array in GEOS-5 file. |
| latitudeName | String, name of 1-D latitude array in GEOS-5 file. |
| hasVerticalDimension | Logical, specifies whether data with vertical dimension are to be processed from GEOS-5 file. |
| verticalName | String, name of 1-D vertical coordinate array in GEOS-5 file. |
| &forecast | |
| numberOfTimes | Integer, number of time slices to process from GEOS-5 file. |
| validTimes(:) | Array of Strings, specifies valid time(s) of each time slice to process. Format is $YYYY-$MM-$DD_$HH. One array entry should exist for each time slice. |
| timeIndices(:) | Array of Integers, specifies time slice indices to process. One array entry should exist for each time slice. |
| forecastHours(:) | Array of Integers, specifies nominal forecast hour length for each processed time slice. One array entry should exist for each time slice. |
| &variables | |
| numberOfVariables | Integer, specifies total number of variables to process from the GEOS-5 file. |
| variableRanks(:) | Array of Integers, specifies the ranks (number of dimensions) for each GEOS-5 variable to process. Data of rank 3 are assumed to be organized as (lat,lon,time), while rank 4 data are assumed to be organized as (lat,lon,vert,time). One array entry should be assigned for each processed variable. |
| variableLevelTypes(:) | Array of Integers, specifies level type for each processed variable. One array entry should be assigned for each variable. 1 = ground level; 2 = 2 meters AGL; 3 = 10 meters AGL; 4 = mean sea level; 11 = model level; 12 = isobaric level. |
| variableNamesIn(:) | Array of Strings, specifies the name of each processed variable in GEOS-5 file. One array entry should be specified for each variable. |
| variableNamesOut(:) | Array of Strings, specifies the name of each processed variable as written in the WPS file. One array entry should be specified for each variable. Note that if a processed variable is intended for direct use by WPS (instead of use in deriving something else), the variableNamesOut entry should match that in the METGRID.TBL look-up file used by METGRID. |
| variableUnits(:) | Array of Strings, specifies units of each processed GEOS-5 variable. One array entry should be specified for each variable. This is included because some GEOS-5 variables are known to be assigned the wrong units when output by the model. |
| variableDescriptions(:) | Array of Strings, gives short descriptions of each processed variable as written in the WPS file. One array entry should be specified for each variable. |
| &subsetData | |
| subset | Logical, specifies whether to process the entire GEOS-5 domain or to read and process a subset. |
| iLonMin | Integer, specifies minimum i (longitude) index of GEOS-5 grid to process. Only used if subset=.true. |
| iLonMax | Integer, specifies maximum i (longitude) index of GEOS-5 grid to process. Only used if subset=.true. |
| jLatMin | Integer, specifies minimum j (latitude) index of GEOS-5 grid to process. Only used if subset=.true. |
| jLatMax | Integer, specifies maximum j (latitude) index of GEOS-5 grid to process. Only used if subset=.true. |
| kVertMin | Integer, specifies minimum k (vertical) index of GEOS-5 grid to process. Only used if subset=.true. |
| kVertMax | Integer, specifies maximum k (vertical) index of GEOS-5 grid to process. Only used if subset=.true. |
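
Because namelist.geos2wps requires one array entry per time slice and one per variable, a quick consistency check before running GEOS2WPS can catch mismatched array lengths. The Python sketch below assumes the namelist settings have already been loaded into a dictionary by some other means; the function name, the dictionary layout, and every sample value (including the GEOS-5 variable names) are hypothetical and serve only as illustration.

```python
def check_geos2wps_counts(nml):
    """Return messages for per-slice or per-variable arrays of the wrong length."""
    per_time = ["validTimes", "timeIndices", "forecastHours"]
    per_variable = [
        "variableRanks", "variableLevelTypes", "variableNamesIn",
        "variableNamesOut", "variableUnits", "variableDescriptions",
    ]
    problems = []
    for count_key, array_keys in (("numberOfTimes", per_time),
                                  ("numberOfVariables", per_variable)):
        expected = nml[count_key]
        for key in array_keys:
            actual = len(nml.get(key, []))
            if actual != expected:
                problems.append(f"{key}: expected {expected} entries, found {actual}")
    return problems


# Hypothetical settings: one time slice, two variables.
settings = {
    "numberOfTimes": 1,
    "validTimes": ["2009-08-25_00"],
    "timeIndices": [1],
    "forecastHours": [0],
    "numberOfVariables": 2,
    "variableRanks": [4, 3],
    "variableLevelTypes": [11, 1],
    "variableNamesIn": ["T", "PS"],
    "variableNamesOut": ["TT", "PSFC"],
    "variableUnits": ["K", "Pa"],
    "variableDescriptions": ["Temperature", "Surface pressure"],
}
print(check_geos2wps_counts(settings))
```

An empty list means every per-slice and per-variable array has the expected number of entries; the check says nothing about whether the values themselves are valid.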

The namelist.temporalInterpolation file contains the following information:

| Variable Names | Description |
|---|---|
| &all | |
| fieldName | String, lists name of the variable to process. |
| &input1 | |
| directory1 | String, lists directory with WPS intermediate file. |
| prefix1 | String, lists prefix of the name of WPS intermediate file. |
| year1 | Integer, lists the valid year of the WPS intermediate file. |
| month1 | Integer, lists valid month of WPS intermediate file. |
| day1 | Integer, lists the valid day of the WPS intermediate file. |
| hour1 | Integer, lists valid hour of WPS intermediate file. |
| &input2 | |
| directory2 | String, lists directory with WPS intermediate file. |
| prefix2 | String, lists prefix of the name of WPS intermediate file. |
| year2 | Integer, lists the valid year of the WPS intermediate file. |
| month2 | Integer, lists valid month of WPS intermediate file. |
| day2 | Integer, lists the valid day of the WPS intermediate file. |
| hour2 | Integer, lists valid hour of WPS intermediate file. |
| &output | |
| directoryOutput | String, lists directory with WPS intermediate file. |
| prefixOutput | String, lists prefix of the name of WPS intermediate file. |
| yearOutput | Integer, lists the valid year of the WPS intermediate file. |
| monthOutput | Integer, lists valid month of WPS intermediate file. |
| dayOutput | Integer, lists the valid day of WPS intermediate file. |
| hourOutput | Integer, lists valid hour of WPS intermediate file. |
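
The linear interpolation in time that temporalInterpolation performs between the &input1 and &input2 files can be sketched as follows. This is a minimal illustration on plain Python lists rather than WPS intermediate files; the function name and the sample values are hypothetical, and the actual program may treat details such as missing values differently.

```python
from datetime import datetime


def interpolate_in_time(field1, time1, field2, time2, time_out):
    """Linearly interpolate two equally sized fields to time_out."""
    if not time1 <= time_out <= time2 or time1 == time2:
        raise ValueError("time_out must lie between two distinct input times")
    # Weight of the second field grows from 0 at time1 to 1 at time2.
    weight2 = (time_out - time1) / (time2 - time1)
    return [a + weight2 * (b - a) for a, b in zip(field1, field2)]


# Hypothetical temperatures (K) at 00Z and 06Z, interpolated to 03Z.
t00 = datetime(2009, 8, 25, 0)
t06 = datetime(2009, 8, 25, 6)
t03 = datetime(2009, 8, 25, 3)
print(interpolate_in_time([280.0, 290.0], t00, [286.0, 284.0], t06, t03))
```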

The namelist.createSOILHGT file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists valid year of WPS file. |
| month | Integer, lists valid month of WPS file. |
| day | Integer, lists valid day of WPS file. |
| hour | Integer, lists valid hour of WPS file. |
| surfaceGeopotentialName | String, name of the surface geopotential field in WPS file. |
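
The conversion that createSOILHGT performs, from surface geopotential to terrain height, amounts to dividing by the gravitational acceleration. The sketch below assumes standard gravity (9.80665 m s^-2); the exact constant used inside createSOILHGT is not stated here, and the function name is hypothetical.

```python
STANDARD_GRAVITY = 9.80665  # m s^-2; assumed constant, not taken from NU-WRF


def terrain_height(surface_geopotential):
    """Convert surface geopotential (m^2 s^-2) to terrain height (m)."""
    return [phi / STANDARD_GRAVITY for phi in surface_geopotential]


# Hypothetical geopotential values for a sea-level point and an elevated point.
print(terrain_height([0.0, 9806.65]))
```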

The namelist.createHGT file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists the valid year of the WPS file. |
| month | Integer, lists valid month of WPS file. |
| day | Integer, lists the valid day of the WPS file. |
| hour | Integer, lists valid hour of the WPS file. |
| layerPressureThicknessName | String, name of pressure thickness variable between GEOS-5 model levels in the input WPS file. |
| layerTemperatureName | String, name of model layer temperatures in the input WPS file. |
| layerSpecificHumidityName | String, name of model layer specific humidity variable in the input WPS file. |
| soilHeightName | String, name of surface terrain variable in the input WPS file. |
| modelTopPressure | Real, air pressure (in Pa) at the very top of GEOS-5 grid. For GEOS-5, this is typically 1 Pa (0.01 mb). |

The namelist.createLANDSEA file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists the valid year of the WPS file. |
| month | Integer, lists valid month of WPS file. |
| day | Integer, lists the valid day of the WPS file. |
| hour | Integer, lists valid hour of the WPS file. |
| lakeFractionName | String, name of the GEOS-5 variable specifying fraction of grid point covered by lakes in the WPS input file. |
| oceanFractionName | String, GEOS-5 variable name specifying fraction of grid point covered by the ocean in the WPS input file. |
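
A land-sea mask can be derived from the lake and ocean fractions by thresholding the total water fraction. The sketch below is only one plausible rule: the 0.5 threshold and the convention of 1 for land and 0 for water are assumptions made for illustration, and the rule actually applied by createLANDSEA may differ.

```python
def land_sea_mask(lake_fraction, ocean_fraction, water_threshold=0.5):
    """Return 1.0 for land points and 0.0 for water points (assumed convention)."""
    mask = []
    for lake, ocean in zip(lake_fraction, ocean_fraction):
        water = lake + ocean
        mask.append(0.0 if water >= water_threshold else 1.0)
    return mask


# Hypothetical points: all land, mostly ocean, mostly lake.
print(land_sea_mask([0.0, 0.1, 0.6], [0.0, 0.7, 0.0]))
```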

The namelist.createPRESSURE file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists the valid year of the WPS file. |
| month | Integer, lists valid month of WPS file. |
| day | Integer, lists the valid day of the WPS file. |
| hour | Integer, lists valid hour of the WPS file. |
| layerPressureThicknessName | String, names variable with pressure thicknesses between GEOS model levels in the WPS input file. |
| modelTopPressure | Real, air pressure (in Pa) at very top of GEOS-5 grid. For GEOS-5, this is typically 1 Pa (0.01 mb). |
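
The role of modelTopPressure and the layer pressure thicknesses can be illustrated with a single-column sketch: starting at the model top, each thickness is added to obtain the next layer edge, and the mid-layer pressure is taken between the two edges. Using the arithmetic mean of the edges is an assumption of this sketch, not a statement of what createPRESSURE does internally, and the function name and sample values are hypothetical.

```python
def mid_layer_pressures(layer_thicknesses, model_top_pressure):
    """Return mid-layer pressures (Pa), ordered from model top downward."""
    pressures = []
    upper_edge = model_top_pressure
    for thickness in layer_thicknesses:
        lower_edge = upper_edge + thickness
        # Assumed definition: arithmetic mean of the two layer edges.
        pressures.append(0.5 * (upper_edge + lower_edge))
        upper_edge = lower_edge
    return pressures


# Hypothetical three-layer column with a 1 Pa model top.
print(mid_layer_pressures([1.0, 2.0, 4.0], 1.0))
```

The ordering follows the GEOS-5 convention noted earlier, in which index 1 is the model top.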

The namelist.createRH file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, lists directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists the valid year of the WPS file. |
| month | Integer, lists the valid month of the WPS file. |
| day | Integer, lists the valid day of the WPS file. |
| hour | Integer, lists the valid hour of the WPS file. |
| processSurfacePressure | Logical, indicates whether or not to read in surface pressure from the WPS input file. |
| onIsobaricLevels | Logical, indicates whether upper air levels are isobaric instead of model level. |
| surfacePressureName | String, name of surface pressure variable in WPS input file. Ignored if processSurfacePressure=.false. |
| pressureName | String, name of upper-level pressure fields in WPS input file. Ignored if onIsobaricLevels=.true. |
| temperatureName | String, name of temperature fields in WPS input file. If 2-meter temperatures are included, then the surface pressure must also be supplied and processSurfacePressure must be set to .true. |
| specificHumidityName | String, name of specific humidity fields in WPS input file. If 2-meter specific humidities are included, then the surface pressure must also be supplied and processSurfacePressure must be set to .true. |
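The dependencies among the createRH settings above can be checked before running the utility. The following sketch encodes only the rules stated in the table; the function name check_createrh_settings, the has_2m_fields flag, and the field names in the example (PS, PRESSURE, TT, QV) are hypothetical.

```python
def check_createrh_settings(settings, has_2m_fields=False):
    """Return (errors, ignored) for a dictionary of createRH settings."""
    errors, ignored = [], []
    process_ps = settings.get("processSurfacePressure", False)
    isobaric = settings.get("onIsobaricLevels", False)

    # 2-meter temperature or humidity requires surface pressure processing.
    if has_2m_fields and not process_ps:
        errors.append("2-meter fields require processSurfacePressure=.true.")
    if process_ps and not settings.get("surfacePressureName"):
        errors.append("surfacePressureName is needed when "
                      "processSurfacePressure=.true.")

    # Settings that are read but have no effect in this configuration.
    if not process_ps and settings.get("surfacePressureName"):
        ignored.append("surfacePressureName")
    if isobaric and settings.get("pressureName"):
        ignored.append("pressureName")
    return errors, ignored


# Hypothetical configuration that violates the 2-meter rule.
settings = {
    "processSurfacePressure": False,
    "onIsobaricLevels": True,
    "surfacePressureName": "PS",
    "pressureName": "PRESSURE",
    "temperatureName": "TT",
    "specificHumidityName": "QV",
}
errors, ignored = check_createrh_settings(settings, has_2m_fields=True)
print("errors:", errors)
print("ignored:", ignored)
```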

The namelist.extrapIsobaric file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, lists directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists the valid year of the WPS file. |
| month | Integer, lists the valid month of the WPS file. |
| day | Integer, lists the valid day of the WPS file. |
| hour | Integer, lists the valid hour of the WPS file. |
| geopotentialHeightName | String, name of isobaric geopotential height fields in the WPS input file. |
| temperatureName | String, name of isobaric temperature fields in the WPS input file. |
| relativeHumidityName | String, name of isobaric relative humidities in the WPS input file. |
| uName | String, name of isobaric zonal wind field in the WPS input file. |
| vName | String, name of isobaric meridional wind field in the WPS input file. |

The namelist.splitWPS file contains the following information:

| Variable Names | Description |
|---|---|
| &input | |
| directory | String, lists directory for input and output WPS files. |
| prefix | String, lists filename prefix of input WPS files. |
| year | Integer, lists the valid year of the WPS file. |
| month | Integer, lists the valid month of the WPS file. |
| day | Integer, lists the valid day of the WPS file. |
| hour | Integer, lists the valid hour of the WPS file. |

A sample script (utils/geos2wrf/scripts/merra/run_geos2wrf_merra2_3hrassim.sh) is available that uses GEOS2WRF to process 3-hourly MERRA-2 assimilation data. These data are output from GEOS-5 when the global model is adjusting toward a MERRA-2 6-hourly analysis via Incremental Analysis Updates [see section 4.2 of Rienecker et al. (2008)]. This script will run GEOS2WPS, createLANDSEA, createSOILHGT, and createRH to process the data. To run it, the user must first edit the accompanying config.discover.sh file to set the path to the NU-WRF code, the work directory, and the modules used to compile GEOS2WRF; the run_geos2wrf_merra2_3hrassim.sh script should then be modified to specify the start and end dates and hours to process. (Users who wish to use 6-hourly MERRA or MERRA-2 data can use either GEOS2WRF or MERRA2WRF; however, the 3-hourly MERRA-2 data can only be processed with GEOS2WRF.)

MERRA2WRF

MERRA2WRF is a monolithic program customized to process the 6-hourly reanalyses from MERRA and MERRA-2. It was first developed for MERRA under the assumption that the archived data files (called “collections” in GEOS-5 terminology) would be permanent, making it possible to build a single robust preprocessing tool. Support was recently added for 6-hourly MERRA-2 fields; however, 3-hourly MERRA-2 processing is not possible due to significant differences in the data collections (users must fall back to GEOS2WRF for these 3-hourly data).

MERRA and MERRA-2 files are accessible from the NASA GES DISC web page (http://disc.sci.gsfc.nasa.gov), and are available to the general public. MERRA-2 files are also accessible on the NASA Discover supercomputer in /discover/nobackup/projects/gmao/merra2/merra2/scratch/ but are only available to select users authorized by the GMAO.

MERRA2WRF is compiled when running ./build.sh geos2wrf. The following files must then be gathered from the MERRA or MERRA-2 datasets:

- const_2d_asm_Nx (in HDFEOS2 or NETCDF format):
  - 'XDim' or 'lon' (longitude)
  - 'YDim' or 'lat' (latitude)
  - 'PHIS' (surface geopotential)
  - 'FRLAKE' (lake fraction)
  - 'FROCEAN' (ocean fraction)
- inst6_3d_ana_Nv (variable names are HDF4 or netCDF or HDFEOS2):
  - 'longitude' or 'XDim' or 'lon' (longitude)
  - 'latitude' or 'YDim' or 'lat' (latitude)
  - 'time' or 'TIME:EOSGRID' or 'TIME' (synoptic hour)
  - 'levels' or 'Height' or 'lev' (nominal pressure for each model level)
  - 'ps' or 'PS' (surface pressure)
  - 'delp' or 'DELP' (layer pressure thicknesses)
  - 't' or 'T' (layer temperature)
  - 'u' or 'U' (layer eastward wind)
  - 'v' or 'V' (layer northward wind)
  - 'qv' or 'QV' (layer specific humidities)
- inst6_3d_ana_Np (variable names are HDF4 or netCDF or HDFEOS2):
  - 'longitude' or 'XDim' or 'lon' (longitude)
  - 'latitude' or 'YDim' or 'lat' (latitude)
  - 'time' or 'TIME:EOSGRID' or 'TIME' (synoptic hours)
  - 'slp' or 'SLP' (sea level pressure)
- tavg1_2d_slv_Nx (variable names are HDF4 or netCDF or HDFEOS2):
  - 'longitude' or 'XDim' or 'lon' (longitude)
  - 'latitude' or 'YDim' or 'lat' (latitude)
  - 'time' or 'TIME:EOSGRID' or 'TIME' (synoptic hours)
  - 'u10m' or 'U10M' (10-meter eastward wind)
  - 'v10m' or 'V10M' (10-meter northward wind)
  - 't2m' or 'T2M' (2-meter temperature)
  - 'qv2m' or 'QV2M' (2-meter specific humidity)
  - 'ts' or 'TS' (skin temperature)
- tavg1_2d_ocn_Nx (variable names are HDF4/netCDF or HDFEOS2):
  - 'longitude' or 'XDim' or 'lon' (longitude)
  - 'latitude' or 'YDim' or 'lat' (latitude)
  - 'time' or 'TIME:EOSGRID' or 'TIME' (synoptic hours)
  - 'frseaice' or 'FRSEAICE' (sea ice fraction)
- tavg1_2d_lnd_Nx (variable names are HDF4/netCDF or HDFEOS2):
  - 'longitude' or 'XDim' or 'lon' (longitude)
  - 'latitude' or 'YDim' or 'lat' (latitude)
  - 'time' or 'TIME:EOSGRID' or 'TIME' (synoptic hours)

Note that the tavg1_2d_*_Nx collections are 1-hour averages that are valid at the bottom of the hour. For simplicity, MERRA2WRF uses the 00:30Z average data with the 00Z instantaneous fields, the 06:30Z average data with the 06Z instantaneous fields, and so on.
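The time pairing described above amounts to a fixed 30-minute offset between each synoptic time and the hourly average matched with it. A minimal sketch, assuming the four 6-hourly synoptic times of a single day (the function name matching_average_time is hypothetical and not part of MERRA2WRF):

```python
from datetime import datetime, timedelta


def matching_average_time(instantaneous_time):
    """Return the valid time of the 1-hour average paired with a synoptic time."""
    return instantaneous_time + timedelta(minutes=30)


pairs = []
for hour in (0, 6, 12, 18):
    inst = datetime(2015, 7, 11, hour)
    avg = matching_average_time(inst)
    pairs.append((f"{inst:%H:%M}Z", f"{avg:%H:%M}Z"))

for inst_label, avg_label in pairs:
    print(f"{inst_label} instantaneous fields <- {avg_label} average fields")
```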

To download the MERRA data and run MERRA2WRF for a specified start date and end date, go to scripts/python/utils and run merra2wrf.py using the command:

./merra2wrf.py StartDate EndDate -o OutputDir -n NUWRFDIR

For example:

./merra2wrf.py 20150711 20150712 -n <NUWRFDIR>

Start MERRA2WRF conversion...

start_time: 20150711

end_time: 20150712

out_dir: ./

nuwrf_dir: <NUWRFDIR>

date: 2015 07 11

getting files...

running merra2wrf...

date: 2015 07 12

getting files...

running merra2wrf...

Time taken = 110.273767

./merra2wrf.py is DONE.

A namelist file will be created for each processing date, and files readable by METGRID will be generated in a data directory under out_dir.

To use MERRA-2 reanalysis, the user may instead download MERRA-2 files from the GES DISC web page and process them with MERRA2WRF. To do so, go to the utils/geos2wrf/scripts/run_merra directory and run:

./proc_merra2_ges_disc.sh StartDate EndDate RunDir NUWRFDIR

A third alternative is to customize namelist.merra2wrf by hand to process the selected MERRA files and namelist.merra2_2wrf to process the selected MERRA-2 files (both files are in utils/geos2wrf/namelist). The namelist files consist of a single block:

| Variable Names | Description |
|---|---|
| &input | |
| outputDirectory | String, lists directory for writing WPS output files. |
| merraDirectory | String, lists directory containing MERRA or MERRA-2 input files. |
| merraFormat_const_2d_asm_Nx | Integer, specifies format of const_2d_asm_Nx file. 1=HDF4, 2=netCDF, 4=HDFEOS2. |
| merraFile_const_2d_asm_Nx | String, name of const_2d_asm_Nx file. |
| numberOfDays | Integer, lists number of days to process. Each MERRA or MERRA-2 collection (excluding const_2d_asm_Nx) will have one file per day. |
| merraDates(:) | Array of strings, lists each day to be processed (format is YYYY-MM-DD). |
| merraFormat_inst6_3d_ana_Nv | Integer, specifies format of inst6_3d_ana_Nv files. 1=HDF4, 2=netCDF, 4=HDFEOS2. |
| merraFiles_inst6_3d_ana_Nv(:) | Array of strings, specifying names of inst6_3d_ana_Nv files. |
| merraFormat_inst6_3d_ana_Np | Integer, specifies format of inst6_3d_ana_Np files. 1=HDF4, 2=netCDF, 4=HDFEOS2. |
| merraFiles_inst6_3d_ana_Np(:) | Array of strings, specifying names of inst6_3d_ana_Np files. |
| merraFormat_tavg1_2d_slv_Nx | Integer, specifies format of tavg1_2d_slv_Nx files. 1=HDF4, 2=netCDF, 4=HDFEOS2. |
| merraFiles_tavg1_2d_slv_Nx(:) | Array of strings, specifying names of tavg1_2d_slv_Nx files. |
| merraFormat_tavg1_2d_ocn_Nx | Integer, specifies format of tavg1_2d_ocn_Nx files. 1=HDF4, 2=netCDF, 4=HDFEOS2. |
| merraFiles_tavg1_2d_ocn_Nx(:) | Array of strings, specifying names of tavg1_2d_ocn_Nx files. |
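Because each collection has one file per day, the array entries in the namelist grow with the number of days. The sketch below shows one way the per-day entries might be generated for a date range. It emits only a subset of the variables in the table (numberOfDays, merraDates, and the inst6_3d_ana_Nv file list), and the file name pattern is a placeholder assumed for the example rather than an actual MERRA or MERRA-2 file name.

```python
from datetime import date, timedelta


def days_between(start, end):
    """Return every date from start to end, inclusive."""
    count = (end - start).days + 1
    return [start + timedelta(days=i) for i in range(count)]


def render_merra_entries(start, end, file_pattern):
    """Return namelist text with per-day entries (partial namelist only)."""
    days = days_between(start, end)
    lines = ["&input", f"  numberOfDays = {len(days)}"]
    for i, day in enumerate(days, start=1):  # Fortran arrays are 1-based
        lines.append(f"  merraDates({i}) = '{day:%Y-%m-%d}'")
    for i, day in enumerate(days, start=1):
        name = file_pattern.format(ymd=f"{day:%Y%m%d}")
        lines.append(f"  merraFiles_inst6_3d_ana_Nv({i}) = '{name}'")
    lines.append("/")
    return "\n".join(lines) + "\n"


# Placeholder file name pattern; substitute the names of the downloaded files.
entries = render_merra_entries(
    date(2015, 7, 11), date(2015, 7, 12),
    "example.inst6_3d_ana_Nv.{ymd}.nc4",
)
print(entries)
```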

The software is run by typing ./merra2wrf.x namelist.merra2wrf. The output files will be named MERRA:$YYYY-$MM-$DD_$HH, where $YYYY is the four-digit year, $MM is the two-digit month, $DD is the two-digit day, and $HH is the two-digit hour. These files are readable by METGRID.
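The naming convention above can be used to predict which output files a run should produce, for example to check that none are missing before running METGRID. A minimal sketch, assuming 6-hourly output; the function names are hypothetical:

```python
from datetime import datetime, timedelta


def merra_output_name(valid_time):
    """Return the MERRA2WRF output file name for a valid time."""
    return f"MERRA:{valid_time:%Y-%m-%d_%H}"


def expected_outputs(start, end, step_hours=6):
    """List the output names expected between start and end, inclusive."""
    names = []
    current = start
    while current <= end:
        names.append(merra_output_name(current))
        current += timedelta(hours=step_hours)
    return names


for name in expected_outputs(datetime(2015, 7, 11, 0), datetime(2015, 7, 11, 18)):
    print(name)
```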

Use of New Erodible Soil Options

NU-WRF includes several new options for specifying erodible soil (EROD) for dust emissions (Kim, Kemp, and Chin 2014). The workflow depends somewhat on the option selected, but in all cases requires compilation of WRF-Chem and WPS (./build.sh chem,wps if using normal chemistry, or ./build.sh kpp,wps if using KPP chemistry).

The four available EROD options are:

EROD_STATIC. This is the EROD option inherited from the community WRF. Annual EROD at 0.25 deg resolution for sand, silt, and clay is processed and fed to WRF-Chem.

EROD_MDB. This is a new seasonal EROD dataset derived from MODIS-Deep Blue climatological aerosol products. [See Ginoux et al. (2012) and Ginoux et al. (2010) for a description of estimating the frequency of occurrence of optical depth; these frequencies are converted to EROD.] Data are subdivided into three groups (for sand, silt, and clay) at 0.1 deg resolution for four meteorological seasons (December-January-February, March-April-May, June-July-August, September-October-November). These are processed and passed to WRF-Chem.

EROD_DYN_CLIMO. This is a new “dynamic climatological” EROD option. It uses a monthly surface bareness field derived at 30-arc second resolution from the community WRF climatological MODIS vegetation fraction dataset (greenness_fpar_modis/), with adjustments from the community WRF’s soil type and MODIS and USGS land use datasets to screen out water bodies. It also uses a 30-arc second topographic depression dataset derived from the community WRF’s terrain dataset. These fields are passed to WRF-Chem, which will create an instantaneous EROD field from these variables with adjustments to screen out snowy or very cold locations.

EROD_DYN. This is a new “dynamical” EROD option. It uses the same topographic depression field as EROD_DYN_CLIMO, plus a surface bareness field based on either NASA GIMMS or NASA SPoRT daily NDVI products. The data are passed to WRF-Chem, which will construct an instantaneous EROD field from the bareness and topographic depression with adjustments to screen out snowy or very cold locations.
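For the seasonal EROD_MDB option, the four meteorological seasons listed above correspond to a simple month-to-season lookup. The sketch below is illustrative only: the season labels and the function name are assumptions made for the example, and it does not describe how WPS or WRF-Chem select the seasonal field internally.

```python
# Meteorological seasons as listed for EROD_MDB; labels are illustrative.
SEASONS = {
    "DJF": (12, 1, 2),
    "MAM": (3, 4, 5),
    "JJA": (6, 7, 8),
    "SON": (9, 10, 11),
}


def season_for_month(month):
    """Return the meteorological season label for a month number (1-12)."""
    for label, months in SEASONS.items():
        if month in months:
            return label
    raise ValueError(f"month must be in 1-12, got {month}")


print(season_for_month(7))   # JJA
print(season_for_month(12))  # DJF
```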

A sample workflow for EROD is presented here:

- Assemble GEOG data. Several new EROD-related fields must be obtained from the NU-WRF group and placed in subdirectories with the standard GEOG data available from the community WRF. For EROD_MDB, they are